Time Series Data with InfluxDB and Grafana

On the face of it, collecting metrics can appear deceptively simple: after all, metrics consist of simple types such as labels, numbers and timestamps. When modern distributed systems generate tens of billions of records per day, though, the picture becomes much more complex. That data needs to be ingested, stored and analysed at high speed, which can lead to huge storage costs and demand massive computational power. To meet these challenges, a number of dedicated time series databases have appeared on the market in recent years. One of the most popular of these is InfluxDB. InfluxDB is something of a veteran, having been around in one form or another for over 10 years, and now boasts over 500,000 active users. It promises high performance and scalability at a competitive price.

InfluxDB is open source and is available in both cloud and self-hosted versions. In this article we will look at:

- setting up a simple InfluxBb instance

- formatting and ingesting a metrics file

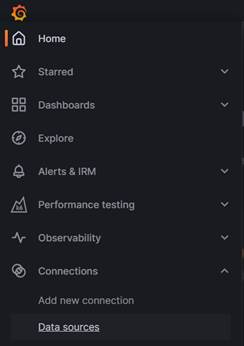

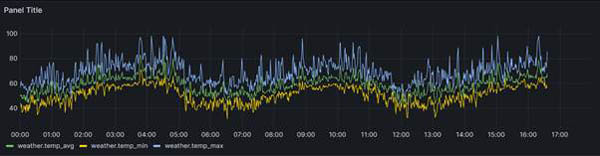

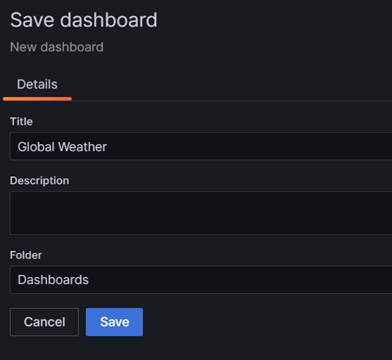

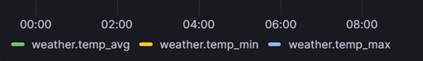

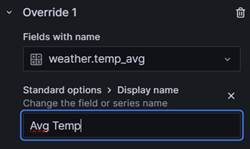

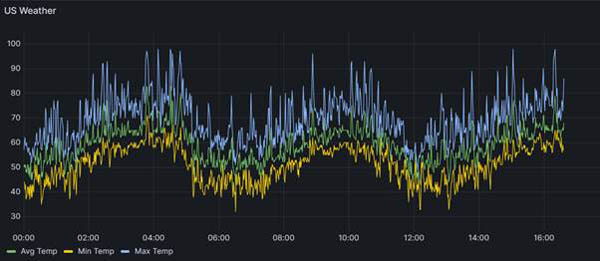

- visualizing the data in Grafana

InfluxDB Cloud Serverless

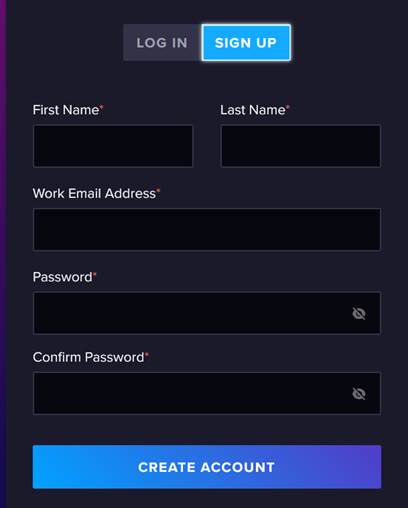

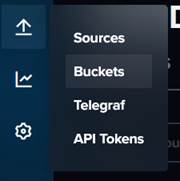

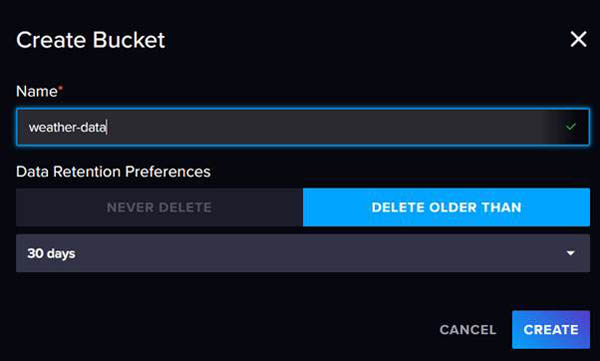

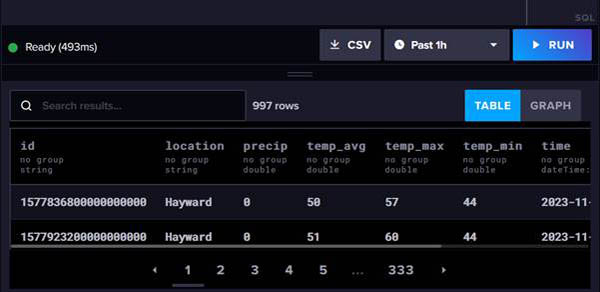

We are going to use the free tier of InfluxDB Cloud Serverless. This tier gives us an allocation of two "buckets", which are analogous to databases. In addition, buckets on this tier have a maximum retention period of 30 days. Because this is a time series database, the term 'retention period' has a specialised meaning. It does not simply mean that any row of data submitted to the API will be retained for thirty days. Instead, it means that a row's timestamp cannot be more than 30 days in the past. Setting up a cloud account is an extremely simple process. As you can see below, the sign-up screen only requires a few basic details:

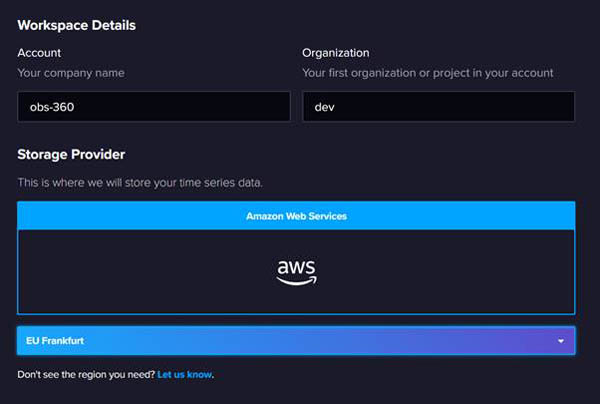

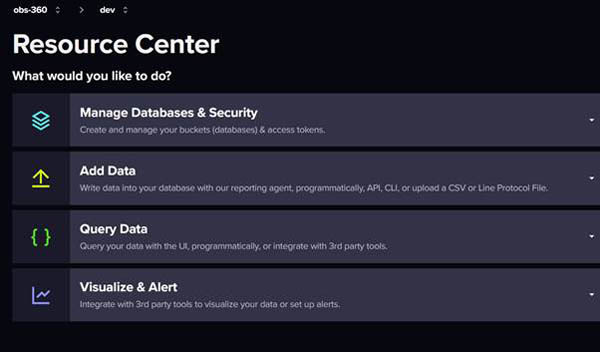
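The retention rule described above can be expressed as a simple check. The Python sketch below is illustrative only. The helper names are hypothetical and are not part of any InfluxDB client, and it assumes timezone-aware UTC timestamps. It also shows one way to bring an old series of readings into the window. Every timestamp is shifted by the same offset so that the latest reading lands at the current time and the spacing between readings is preserved.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Optional

# Maximum retention period on the free Cloud Serverless tier, as described above.
RETENTION = timedelta(days=30)


def within_retention(ts: datetime, now: Optional[datetime] = None,
                     retention: timedelta = RETENTION) -> bool:
    """Return True if `ts` is no more than `retention` in the past (hypothetical helper)."""
    if now is None:
        now = datetime.now(timezone.utc)
    return now - ts <= retention


def shift_to_now(stamps: List[datetime], now: datetime) -> List[datetime]:
    """Shift a series so its latest timestamp equals `now`, keeping the spacing."""
    offset = now - max(stamps)
    return [ts + offset for ts in stamps]


now = datetime(2024, 6, 30, 12, 0, tzinfo=timezone.utc)
old = [datetime(2024, 1, 1, 0, m, tzinfo=timezone.utc) for m in range(3)]
print([within_retention(ts, now) for ts in old])      # [False, False, False]
shifted = shift_to_now(old, now)
print([within_retention(ts, now) for ts in shifted])  # [True, True, True]
print(shifted[0].isoformat())                         # 2024-06-30T11:58:00+00:00
```

Shifting the whole series by one offset, rather than rewriting each timestamp independently, keeps the one-minute spacing of the readings intact.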

Once we have created our account, we just need to enter some workspace details and then confirm that we want to use the free plan. Now we are ready to add our data.

Adding Data

Our aim is to upload some time series data to InfluxDB and then visualize that data in Grafana. There are numerous methods for uploading data to InfluxDB:

- The InfluxDB API

- The CLI

- Uploading a file via the UI

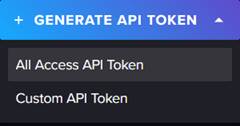

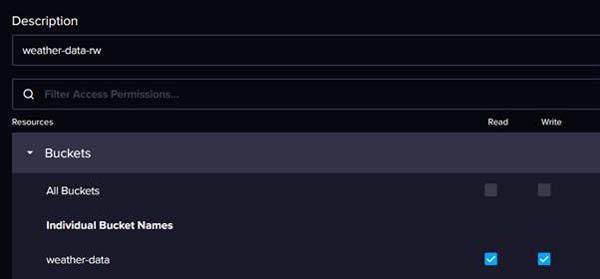
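The rest of this article uses the CLI. For comparison, here is a minimal sketch of the API route listed above. It formats one reading as InfluxDB line protocol and builds, without sending, a POST request to the v2 `/api/v2/write` endpoint. The host URL, the org `dev`, the bucket `weather-data` and the token `1234` are the placeholder values used elsewhere in this article. The helper functions are hypothetical, and the field names and the choice to treat `location` as a tag are assumptions.

```python
import urllib.parse
import urllib.request
from datetime import datetime, timezone


def escape_tag(value: str) -> str:
    # Line protocol requires commas, equals signs and spaces in tag values to be escaped.
    return value.replace(",", r"\,").replace("=", r"\=").replace(" ", r"\ ")


def to_line_protocol(measurement: str, location: str, fields: dict,
                     ts: datetime) -> str:
    """Format one reading as a line protocol point with second precision.

    Field keys are assumed to be simple names that need no escaping.
    """
    field_set = ",".join(f"{k}={float(v)}" for k, v in fields.items())
    seconds = int(ts.timestamp())
    return f"{measurement},location={escape_tag(location)} {field_set} {seconds}"


def build_write_request(host: str, org: str, bucket: str, token: str,
                        body: str) -> urllib.request.Request:
    """Build (but do not send) a POST to the InfluxDB v2 write endpoint."""
    query = urllib.parse.urlencode({"org": org, "bucket": bucket, "precision": "s"})
    return urllib.request.Request(
        f"{host}/api/v2/write?{query}",
        data=body.encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Token {token}",
            "Content-Type": "text/plain; charset=utf-8",
        },
    )


line = to_line_protocol(
    "weather", "New York", {"wind": 12.5, "rain": 0.0, "temperature": 21.3},
    datetime(2024, 6, 1, 9, 30, tzinfo=timezone.utc),
)
print(line)  # weather,location=New\ York wind=12.5,rain=0.0,temperature=21.3 1717234200

req = build_write_request("https://eu-central-1-1.aws.cloud2.influxdata.com",
                          "dev", "weather-data", "1234", line)
print(req.full_url)
# Passing `req` to urllib.request.urlopen() would actually send the write.
```

Building the request separately from sending it makes it easy to inspect the URL and headers before any data leaves your machine.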

User Configuration

The next thing we are going to do is create an InfluxDB user configuration. This is a named set of connection details (host URL, organization and API token) that the CLI uses when authenticating itself to the InfluxDB API. Saving these details in a configuration means we do not have to pass them in every time we make a call to the API. The syntax for creating the configuration is:

.\influx config create --config-name CONFIG_NAME --host-url HOST_URL --org ORG_NAME --token API_TOKEN

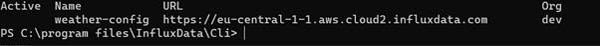

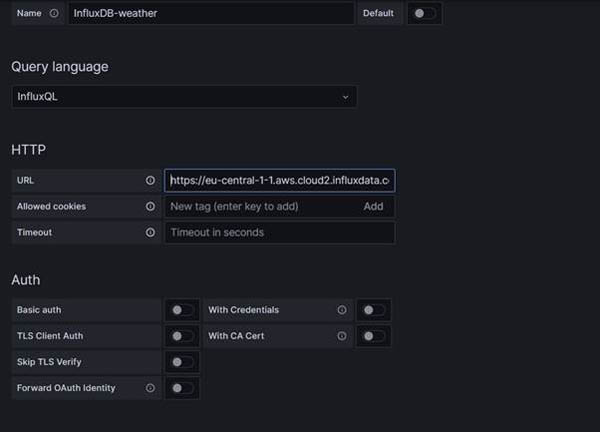

To connect to a cloud instance, you enter the scheme and fully qualified domain name from the InfluxDB URL that you see in your browser. In my case it is 'https://eu-central-1-1.aws.cloud2.influxdata.com'.

You do not need to enter anything after the '.com' segment. The command we will run will therefore look something like this:

.\influx config create --config-name weather-config --host-url https://eu-central-1-1.aws.cloud2.influxdata.com --org dev --token 1234

If the command runs successfully, you will see a confirmation like this:

Data Structure and Annotations

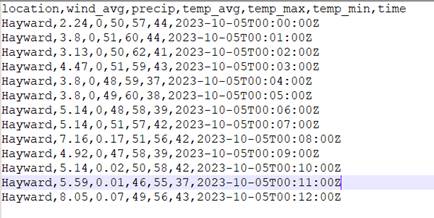

Now it is time for us to get some data into our bucket. In a real-world situation you would probably have a service continually streaming data into the InfluxDB API. To keep things simple, we are just going to upload data from a CSV file. Our file is called weather-data-us.csv and it contains 996 records. These are fictitious weather readings for a single day, taken at one-minute intervals. You can find this file in our GitHub repo. If you do use this file, you may need to update the values in the time field so that they fall within the last 30 days. Otherwise the InfluxDB API will reject them as being outside the retention period. The structure of the CSV is shown below:

As you can see, we have a location field followed by values for wind, rain and temperature, and then a timestamp field. We are going to use the CLI's 'write' command to upload our data. This just requires us to pass in the name of our file and the name of the bucket we want to write to. OK, let's go ahead and run our command:

.\influx write --bucket "weather-data" --file "weather-data-us.csv"

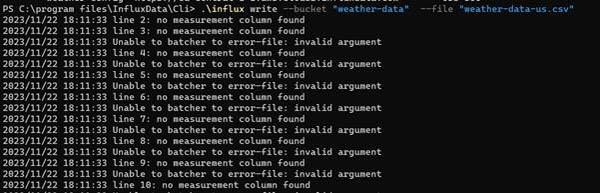

Oops! Kaboom!

Even though we have supplied a correctly formatted CSV file, it is not yet valid for ingestion into InfluxDB. Before InfluxDB can ingest our data, it needs to understand the data's shape. Otherwise it will not be able to store and retrieve the data optimally. We need to provide 'annotations' which describe the data and tell InfluxDB how to process it. In particular, we need to provide a 'measurement' and describe the types of data we are passing. You can find out more about annotations in the InfluxDB Cloud Serverless documentation.

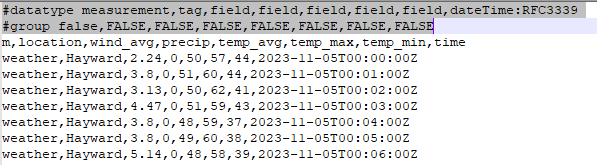

We are now going to update our CSV file with the required annotations:

You will see that we have inserted two lines at the top of the file. The first row defines the structure of the data. The first value after the '#datatype' declaration is the word 'measurement'. Every file we submit must define a measurement. This is akin to assigning a table name, so that InfluxDB can group our data together logically. We have also inserted a new column containing the name of our measurement, i.e. 'weather'. Although this seems a little redundant, it makes sense once you realise that we can supply multiple measurements (tables) in the same file, and InfluxDB needs some way of distinguishing between them. The revised file is named "weather-data-us-2.csv" and is also in the GitHub repo.
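The annotation step can also be scripted. The sketch below prepends a '#datatype' row and adds a leading measurement column filled with 'weather' to every data row. The script makes several assumptions:

- the column layout described earlier (location, wind, rain, temperature, time);
- location is treated as a tag and the three readings as doubles;
- the time column holds RFC 3339 timestamps;
- the new column uses the header name measurement.

These choices are illustrative, so compare the output against the revised file in the GitHub repo. The sketch also does not necessarily reproduce that file line for line.

```python
import csv
import io

MEASUREMENT = "weather"
# Assumed column layout after annotation: measurement, location, wind, rain,
# temperature, time. location is treated as a tag, the readings as doubles.
DATATYPES = ["measurement", "tag", "double", "double", "double", "dateTime:RFC3339"]


def annotate(csv_text: str, measurement: str = MEASUREMENT) -> str:
    """Return the CSV with a #datatype row and a leading measurement column."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    header, data = rows[0], rows[1:]
    if len(header) + 1 != len(DATATYPES):
        raise ValueError("column count does not match the assumed layout")
    out = io.StringIO()
    # The annotation row must come before the header row.
    out.write("#datatype " + ",".join(DATATYPES) + "\n")
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["measurement", *header])  # hypothetical header name
    for row in data:
        writer.writerow([measurement, *row])
    return out.getvalue()


# Hypothetical sample rows; the real file has 996 of them.
sample = (
    "location,wind,rain,temperature,time\n"
    "Denver,5.1,0.0,18.2,2024-06-30T12:00:00Z\n"
)
print(annotate(sample))
```

To apply it to the real data, read weather-data-us.csv, pass its contents to annotate() and write the result to a new file such as weather-data-us-2.csv.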

We will run our command again - this time pointing to the new file:

.\influx write --bucket "weather-data" --file "weather-data-us-2.csv"

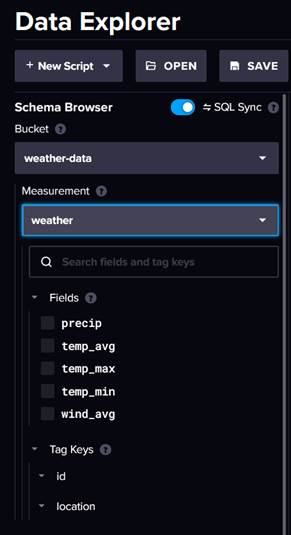

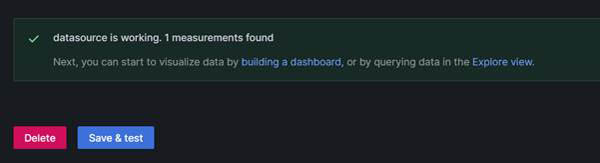

If the write command succeeds, the CLI does not print anything to the terminal. We can, however, verify that the write was successful by checking in the InfluxDB UI. In the Data Explorer we can select our bucket (weather-data) and our measurement (weather), and we will then see a list of fields:

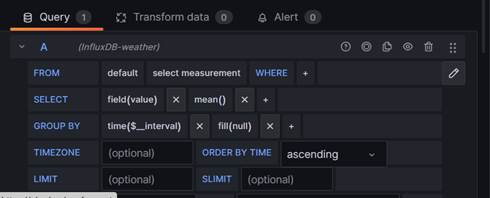

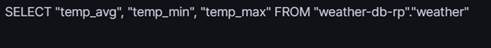

You will also see that the Data Explorer auto-generates a query for us so that we can view our data:

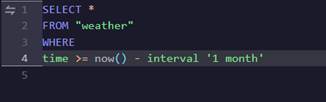

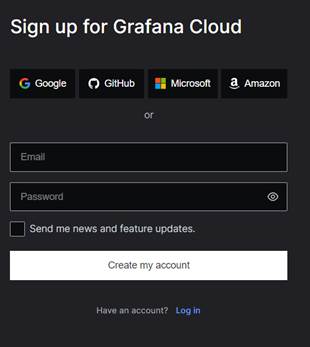

This query defaults to looking at records from the past hour. Our data is not that fresh, so we will change the query to bring back data from the past month:

When we run the query, we will see that 996 records are returned:

dbrp Mapping

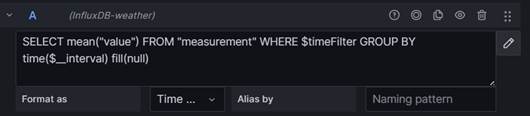

We can now view our data in the InfluxDB UI. However, we have one more configuration step to complete to make our bucket accessible to Grafana. InfluxDB supports different languages for querying our data, including Flux and InfluxQL. Flux is a powerful query language, but we are going to use InfluxQL because its syntax is close to the SQL that many of us are familiar with. There is a slight complication when we do this, though. There seems to be a mismatch between the current Grafana connectors and the different versions of the InfluxDB backend APIs. In version 1 of InfluxDB, data was stored in databases (each with one or more retention policies) and the query language was InfluxQL. With version 2, databases were replaced with buckets and Flux became the primary query language. Grafana therefore seems to assume that, if you wish to use InfluxQL as your query language, you are talking to an InfluxDB 1 database rather than an InfluxDB 2 bucket. Appendix 1 below provides some more detail on this.
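To make the mismatch concrete, here is a sketch of what an InfluxDB 1 style InfluxQL request looks like. It builds, without sending, a GET request to the v1-compatible `/query` endpoint. Note that the request addresses a database (`db`) and a retention policy (`rp`) rather than a bucket. The host, token, database and retention policy names are the placeholder values used elsewhere in this article, and the helper function is hypothetical. The query itself, including the `temperature` field name, is only illustrative.

```python
import urllib.parse
import urllib.request


def build_influxql_request(host: str, token: str, db: str, rp: str,
                           query: str) -> urllib.request.Request:
    """Build (but do not send) an InfluxQL request for the v1-compatible /query endpoint.

    A v1-style client names a database and retention policy, not a bucket,
    which is why a database/retention-policy mapping is needed.
    """
    params = urllib.parse.urlencode({"db": db, "rp": rp, "q": query})
    return urllib.request.Request(
        f"{host}/query?{params}",
        headers={"Authorization": f"Token {token}"},
    )


req = build_influxql_request(
    "https://eu-central-1-1.aws.cloud2.influxdata.com",
    token="1234",
    db="weatherdb",
    rp="weather-db-rp",
    query='SELECT MEAN("temperature") FROM "weather" WHERE time > now() - 30d',
)
print(req.full_url)
```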

To get around this discrepancy, we need to create a mapping. The mapping takes the name of a database and the name of a retention policy and maps them to our bucket. Neither the database nor the retention policy that you define actually needs to exist! They are just placeholders that enable us to create the mapping. This does sound a little counter-intuitive. On the upside, though, the command for creating the mapping is pretty simple. From the InfluxDB command line we just need to run the 'v1 dbrp create' command. This command takes three parameters:

- bucket-id - the alphanumeric ID of our bucket (not its name)

- db - a placeholder database name

- rp - a placeholder retention policy name

.\influx v1 dbrp create --bucket-id 12345678 --db weatherdb --rp weather-db-rp -c weather-config

The -c flag tells the CLI to use the weather-config configuration that we created earlier. If the command executes successfully, you will see a response like this: