It may seem odd that, despite the increasingly widespread adoption of the OpenTelemetry framework, not much in the way of third-party tooling has emerged. There is Dash0’s very handy OTelBin validation tool and the otel-cli utility, but not much else besides.

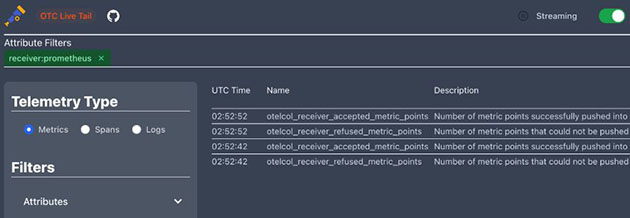

We were very pleased, therefore, to recently come across not one but two very handy tools for debugging the oTel Collector. First up is Tails - an app that runs as a sidecar to your OpenTelemetry Collector. Its developers include Jacob Aronoff of Lightstep and Austin Parker of Honeycomb. It is a lightweight web server that listens on a socket and streams live messages from a Collector. The app supports logs, traces and metrics and also has some cool features such as Play/Pause mode and filtering. The oTel Collector is a great piece of engineering but it can also be a bit of a black box, and Tails is a great tool for providing visibility into the Collector’s telemetry streams.

Whereas Tails runs as a sidecar, oTel Desktop Viewer is a CLI tool that generates visualisations of OpenTelemetry traces on your local machine. This is a really handy utility for those times when you want to view traces but have not yet got around to setting up an oTel Collector or a third-party backend. If you are a strictly command-line oTel ninja then fear not: GitHub user Y.Matsuda has created a version that runs in a terminal. It would be great to see more oTel tooling like this emerging.

If the Observability world had a code of secrecy akin to that of the Magic Circle, then Charity Majors might be in danger of being banished to exile and ignominy. In a single slide deck, she has blown the gaff on a whole trove of insider knowledge. It is the “What they don’t teach you at Harvard Business School” of observability knowledge. Not the abstract theory or technical detail but lessons and insights from the o11y frontline.

The deck in question was used in a talk at the LeadDev event in Berlin earlier this month and its 52 slides are an illuminating distillation of observability wisdom. We actually weren’t present at the talk and only came across the deck thanks to a mention in Michael Hausenblas’s excellent o11y news newsletter. However, the slides contain sufficient clues (and images of unicorns) for you to easily reconstruct the narrative and win friends and influence people as an observability savant.

If you are an SRE, when an outage happens you will know about it pretty quickly. With security breaches the picture is rather less clear as, by their nature, they are designed to go undetected. Intrusion detection, therefore, is often based on a mixture of tools designed to spot unusual spikes, suspicious patterns or failed logon attempts.

This article by Fatih Koç argues that one of the major difficulties in identifying attacks is correlating signals across multiple sources such as Falco, Prometheus and Kubernetes audit logs. He outlines a strategy for extracting relevant data from each of these sources and pulling it together into a single observability dashboard.
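To make the idea of correlation a little more concrete, here is a minimal sketch of our own rather than anything taken from the article. It assumes that events from each source have already been normalised into a common shape with a shared key (the `pod` field, the `Signal` class and the sample events are all hypothetical) and it simply flags any pod where two or more distinct sources fire within the same fixed time window.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    """A normalised security event (hypothetical shape, not a real schema)."""
    source: str       # e.g. "falco", "prometheus", "k8s-audit"
    pod: str          # assumed shared correlation key
    timestamp: float  # seconds since epoch
    detail: str


def correlate(signals, window_seconds=60, min_sources=2):
    """Flag (pod, time window) buckets in which several distinct sources fired."""
    buckets = defaultdict(list)
    for signal in signals:
        window_index = int(signal.timestamp // window_seconds)
        buckets[(signal.pod, window_index)].append(signal)

    incidents = []
    for (pod, window_index), items in sorted(buckets.items()):
        sources = sorted({item.source for item in items})
        if len(sources) >= min_sources:
            incidents.append({
                "pod": pod,
                "window_start": window_index * window_seconds,
                "sources": sources,
            })
    return incidents


# Made-up sample events for illustration only.
events = [
    Signal("falco", "api-1", 100.0, "shell spawned in container"),
    Signal("k8s-audit", "api-1", 110.0, "exec into pod"),
    Signal("prometheus", "db-1", 105.0, "CPU spike"),
]
incidents = correlate(events)
```

Fixed windows keep the sketch short, but they will miss two events that straddle a window boundary; a sliding window would be a natural refinement.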

The first line of cyber defence is normally at the perimeter - preventing attackers from entering your network in the first place. The next line of defence is intrusion detection. This can often take the form of anomaly detection using a variety of heuristics.

There are also some more creative possibilities, such as the canary solution adopted by Grafana. Just as the canary in the coalmine sings to alert underground workers to the presence of toxic gases, Grafana’s canary was designed to alert them to the possible presence of intruders in their domain.

Load testing can be simple in theory but, in modern distributed architectures, it involves a lot more than throwing requests at an individual service. This article on the Airbnb engineering blog looks at how the company’s engineers use the Impulse load-testing framework to handle a number of more complex requirements such as dependency mocking and managing messaging and asynchronous calls.

Unfortunately, Impulse is just an internal Airbnb framework at the moment, so you won’t be able to get your hands on it. Even so, the article provides a valuable blueprint for tackling advanced, real-world load-testing scenarios.
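As a rough illustration of what dependency mocking in a load test can look like, here is a small sketch using only the Python standard library. It has nothing to do with Impulse's actual API, which we have not seen; `handle_order`, `fake_payment_service` and the numbers used are all hypothetical. The service under test takes its downstream dependency as a parameter, so the load generator can swap in a stub with a fixed latency.

```python
import asyncio
import time


async def fake_payment_service(order_id):
    """Stub for a downstream dependency: fixed latency, canned response."""
    await asyncio.sleep(0.01)
    return {"order_id": order_id, "status": "ok"}


async def handle_order(order_id, payment_client):
    """Hypothetical service under test; the dependency is injected so it can be mocked."""
    result = await payment_client(order_id)
    return result["status"] == "ok"


async def run_load(total_requests, concurrency, payment_client):
    """Fire total_requests calls with at most `concurrency` in flight at once."""
    semaphore = asyncio.Semaphore(concurrency)
    latencies = []

    async def one_request(i):
        async with semaphore:
            started = time.perf_counter()
            ok = await handle_order(i, payment_client)
            latencies.append(time.perf_counter() - started)
            return ok

    results = await asyncio.gather(*(one_request(i) for i in range(total_requests)))
    return {
        "requests": total_requests,
        "succeeded": sum(results),
        "max_latency": max(latencies),
    }


report = asyncio.run(run_load(50, 10, fake_payment_service))
```

Because the stub is just another coroutine, the same harness could be pointed at a slower or failing stand-in to see how the service behaves when a dependency degrades.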

It’s an announcement that might have seemed unthinkable not long ago, but the porting of the revolutionary eBPF technology to Windows is now a reality. The ability to bring safe programmability to the kernel has resulted in enormous gains in fields such as security, networking and observability for Linux hosts, so applying the same principle to the Windows ecosystem is obviously an attractive proposition. It is not, though, without its own difficulties. There were a lot of hurdles to overcome and, inevitably, given the differences in OS architecture, this is not a full-fidelity replica of the Linux implementation.

This possibly foundational article by Pavel Yosifovich guides you through the steps involved in boldly going where few have gone before and creating your first eBPF program for Windows. One paragraph in the article begins with the sentence “this is where things get a bit hairy” - for some that will likely be a challenge rather than a deterrent. This may not be cooking up nuclear fusion in your bedroom, but it does feel pretty radical.

As well as rolling out their OpenAI observability solution, Elastic have also been very active within the OpenTelemetry project. C++ has a reputation for being something of a fearsome foe for observability practitioners. In this article on the Elastic blog, Haidar Braimaanie dons his protective gear and attempts to tame the beast with a soothing dose of OpenTelemetry instrumentation.

Unlike languages that run on managed frameworks such as .NET, C++ does not have a standardized runtime environment that supports dynamic instrumentation across all platforms and compilers. C++ projects also use a variety of build systems such as Makefiles and CMake, so implementing instrumentation can be difficult and error-prone. In the article, Haidar looks at adding OpenTelemetry support to a C++ application running on Ubuntu 22.04. He also includes sample code for instrumenting the project with database spans and then observing the application in APM.

After reading this article you may want to give the C++ developer in your life a hug.

Even if you are not familiar with the name of Brendan Gregg, you are almost certainly familiar with the fruits of his labours. Brendan is the creator of the Flame Graph - one of the most important and iconic visualisations in the observability toolkit.

We featured the Flame Graph in our recent tribute to the work of UX designers in the observability arena - but you should also visit Brendan’s website.

Brendan’s latest innovation is the AI Flame Chart. This is an evolution of the original flame graph and its ambitious aim is to help reduce the vast financial and environmental costs entailed in the use of LLMs. Whereas the original flame graph was focused on CPU cycles, the latest generation sets its sights on reducing GPU load. The article discusses the considerable complexities involved in mapping GPU programs back to their corresponding CPU stacks.

The names of some of the instruction sets look intimidating to the uninitiated but the basic concept of the graph is quite simple - the wider the bar, the more resource it consumes.
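The "wider bar" idea can be sketched in a few lines of Python. This is our own illustration rather than code from Brendan's tooling: it takes stack samples in the semicolon-separated "folded" style used by flame graph tools and works out each frame's width as its share of all samples. The frame names and sample counts are made up.

```python
from collections import Counter


def frame_widths(folded_stacks):
    """Map each frame path to its share of all samples (its relative bar width).

    folded_stacks: dict of 'root;child;leaf' -> number of samples.
    """
    total = sum(folded_stacks.values())
    counts = Counter()
    for stack, samples in folded_stacks.items():
        frames = stack.split(";")
        # Every ancestor on the stack is charged for the sample too,
        # which is why parent bars are at least as wide as their children.
        for depth in range(1, len(frames) + 1):
            counts[";".join(frames[:depth])] += samples
    return {path: samples / total for path, samples in counts.items()}


# Made-up samples for illustration only.
samples = {
    "main;train;matmul": 6,
    "main;train;softmax": 2,
    "main;load_data": 2,
}
widths = frame_widths(samples)
```

Here `main` spans the full width of the chart, `main;train` takes 80% of it and `main;train;matmul` takes 60%, so the eye is drawn straight to the most expensive path.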

If you have ever had to grapple with a 3,000-line Helm chart to deploy your observability infrastructure, you may be forgiven for thinking that there must be a better way to do this. Whilst YAML has a certain formal elegance, its syntax struggles to express the architectures and relationships embedded in highly complex systems.

Whilst Pulumi have tackled this problem by enabling the use of high-level programming languages for IaC, System Initiative are taking a fundamentally more radical approach. Their goal is nothing less than completely reinventing IaC from the ground up. The blog article for the launch of the product is an incredibly ambitious statement of intent. The terms ‘game changer’ and ‘paradigm shift’ tend to be thrown around somewhat casually, but this might be a case where their usage is appropriate.

So, what are they proposing? Well, System Initiative is IaC without the code. It is a kind of digital canvas where you manipulate digital twins of your systems. Is the future here or is this the Platform Engineering equivalent of science fiction? Read the article and decide for yourself!